Mass web hosting is a popular way to host web sites. Lower costs, easy site creation, and convenience lure site owners to hosts such as HostGator, GoDaddy.com, and Yahoo.com. The problem with mass hosting is just that: a massive number of sites on overloaded servers. With sometimes hundreds of sites on a single web server, your site can suffer slowdowns when another site on the same server gets hammered. Often the best approach in this situation is to move to a new host with more lightly loaded servers. This article shows the effects of such a move for a client on a mass web host.

The Problems with Mass Web Hosting

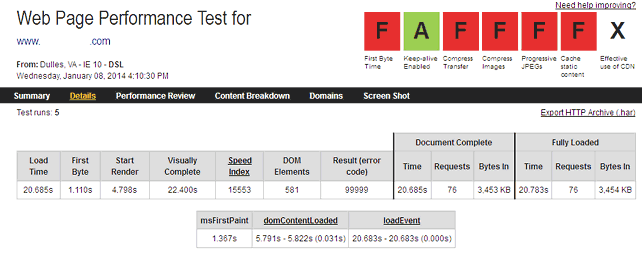

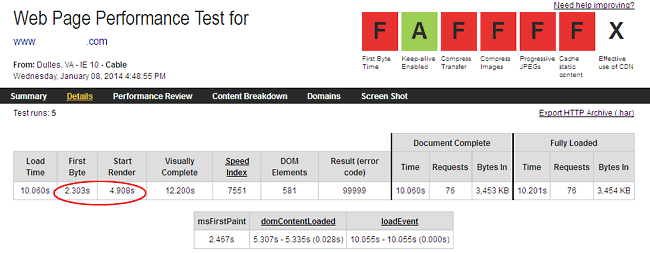

Our example site was hosted on a mass web host. Not surprisingly, the client reported slow and inconsistent response times. A brief performance analysis found a large (3.4MB) home page with 76 requests (see Figures 1 and 2).

Slow and Inconsistent First Byte Times

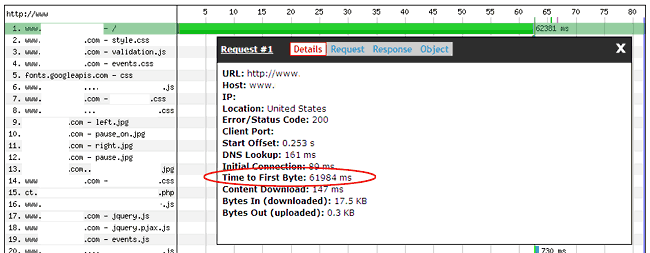

First byte times ranged from 1.02 to over 60 seconds on a DSL connection. Digging into the slowest time to first byte (TTFB) result showed that the DNS lookup, initial connection, and content download times weren’t the problem; the culprit was the 61+ second TTFB, that is, a slow server response (see Figure 3).
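The breakdown described above (DNS lookup, initial connection, then the wait for the first byte) can be approximated from a script. The following is a minimal Python sketch, not the tool used for the measurements in this article. The function name `time_first_byte` and its return keys are hypothetical, it handles plain HTTP only, and it assumes TTFB means the time from sending the request to receiving the first response byte.

```python
import socket
import time
from urllib.parse import urlsplit


def time_first_byte(url, timeout=90.0):
    """Time one plain-HTTP GET and return phase durations in seconds.

    Hypothetical helper for illustration. HTTPS is not handled; it would
    add a TLS handshake phase between connect and request.
    """
    parts = urlsplit(url)
    host = parts.hostname
    port = parts.port or 80
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query

    # Phase 1: DNS lookup (resolve the host name to a socket address).
    t0 = time.perf_counter()
    family, socktype, proto, _, addr = socket.getaddrinfo(
        host, port, type=socket.SOCK_STREAM
    )[0]
    t_dns = time.perf_counter()

    sock = socket.socket(family, socktype, proto)
    sock.settimeout(timeout)
    try:
        # Phase 2: initial TCP connection.
        sock.connect(addr)
        t_connect = time.perf_counter()

        # Phase 3: send the request, then block until the first response byte.
        request = (
            f"GET {path} HTTP/1.1\r\n"
            f"Host: {parts.netloc}\r\n"
            "Connection: close\r\n\r\n"
        )
        sock.sendall(request.encode("ascii"))
        first = sock.recv(1)
        t_first = time.perf_counter()
    finally:
        sock.close()

    if not first:
        raise ConnectionError("server closed the connection without responding")

    return {
        "dns": t_dns - t0,
        "connect": t_connect - t_dns,
        "ttfb": t_first - t_connect,  # assumption: request sent -> first byte
    }
```

Calling `time_first_byte("http://example.com/")` several times and comparing the `ttfb` values gives a rough sense of how consistent a server is. A large `ttfb` alongside small `dns` and `connect` values points at slow server response rather than the network.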

Other Issues for Slow Websites: Crawl Errors, Lower Conversion Rates, & Poor Attunability

Slow web sites can cause a host of other problems (no pun intended). Slower sites can spawn crawl errors with search engines as they struggle to index your site. Crawl errors can reduce the number of pages indexed in your site, reducing your potential traffic and keyword rankings. We found crawl errors with the original host. Conversion rates and attunability (how easily users can get used to a site’s range of response times) can also suffer with slower pages. In summary, mass hosting can cause:

- Slow response times, especially TTFB

- Slow start render times (slow TTFB delays start render times)

- Inconsistent response times – lower attunability (Ritchie & Roast 2001, Roast 1998)

- Crawl errors – fewer indexed pages

- Lower conversion rates (Akamai 2010, Tagman 2012)

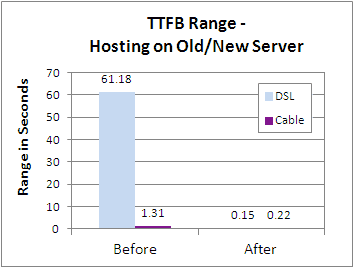

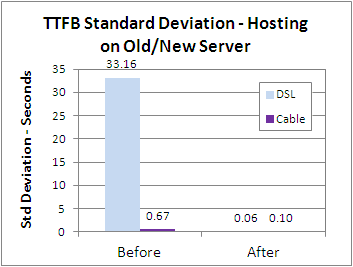

- Lower search engine rankings (Google factors speed into rankings)

The Solution: Moving to a Lightly Loaded Host

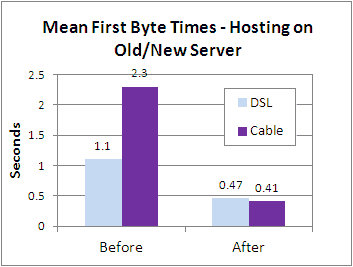

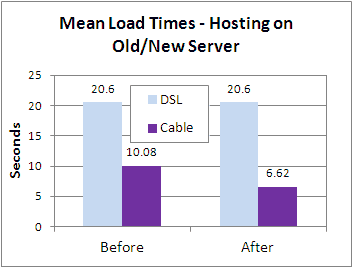

After moving the site to a more lightly loaded shared server environment, first byte times dropped and, more importantly, became more consistent. Mean cable first byte time fell by 82.1%, from 2.3 seconds to 0.41 seconds (see Figure 4). Figure 5 shows that the range of server response times shrank from over 61 seconds to 0.15 seconds for DSL connections after the move, and from 1.31 seconds to 0.22 seconds for cable. Figure 6 shows that first byte time variability (measured by standard deviation) dropped from 33.2 seconds to 0.06 seconds on DSL, and from 0.67 seconds to 0.1 seconds on cable. In other words, variability shrank by a factor of 6.7 for cable and by a factor of more than 500 for DSL, making response times much more attunable. Mean cable load times also improved by 34.3% (see Figure 7), although DSL load times remained the same.
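The percentages and ratios quoted here are simple arithmetic on the before and after figures. The short Python sketch below reproduces them from the rounded values in the text. The function names are hypothetical, and `summarize` assumes that "range" means maximum minus minimum and that the sample standard deviation was used, neither of which the measurements confirm.

```python
import statistics


def percent_reduction(before, after):
    """Percentage by which a value fell, relative to its starting point."""
    return (before - after) / before * 100.0


def shrink_factor(before, after):
    """How many times smaller the 'after' value is than the 'before' value."""
    return before / after


def summarize(samples):
    """Mean, range, and standard deviation for a list of timings in seconds.

    Assumptions: range is max minus min, and the sample (n - 1) standard
    deviation is used.
    """
    return {
        "mean": statistics.mean(samples),
        "range": max(samples) - min(samples),
        "stdev": statistics.stdev(samples),
    }


# Figures as reported in the text (rounded), in seconds.
cable_mean = percent_reduction(2.3, 0.41)
cable_variability = shrink_factor(0.67, 0.1)
dsl_variability = shrink_factor(33.2, 0.06)

print(f"Cable mean first byte time reduction: {cable_mean:.1f}%")
print(f"Cable variability shrank by a factor of {cable_variability:.1f}")
print(f"DSL variability shrank by a factor of {dsl_variability:.0f}")
```

With the rounded inputs the mean reduction works out to about 82.2%, slightly different from the 82.1% quoted, presumably because the quoted figure was computed from unrounded means. The variability ratios come out to 6.7 for cable and roughly 553 for DSL, consistent with "over 500".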

Room for Improvement

While first byte times improved significantly, and load times improved at least for cable, there is still more work to do here. Load times of 20 seconds for DSL and 6.6 seconds for cable connections are well above HCI guidelines of 2-4 seconds (King 2008, Akamai 2009). In our next installment we’ll address optimizing the content automatically to see what effect this has on load times.

Further Reading

- Akamai Reveals 2 Seconds as the New Threshold of Acceptability for eCommerce Web Page Response Times

- 47% of users expect a page to load in 2 seconds, and 40% will abandon a page after 3 seconds. Akamai, 2009.

- Install mod_pagespeed

- Installing mod_pagespeed can make significant improvements in web page performance. In our tests, web page start render times improved by 17% to 43% and load times improved by about one-third. However, while mod_pagespeed improved load times, it is no substitute for manual website optimization.

- New Study Reveals the Impact of Travel Site Performance on Consumers

- This study showed improved conversion rates for faster travel sites. Akamai, 2010.

- Ritchie and Roast, “Performance, Usability, and the Web,”

- in Proceedings of the 34th Hawaii International Conference on System Sciences 5 (Los Alamitos, CA: IEEE Computer Society Press, 2001).

- Roast, “Designing for Delay in Interactive Information Retrieval,”

- Interacting with Computers 10 (1998): 87-104. Introduced the notion of attunability, in that users “attune” to the delay of an interactive system. Raw response times are not enough, the author posits; the consistency of response times also matters, so that users can “attune” or get used to a certain range of response times. The tighter the range (lower standard deviation), the more “attunable” the system will be.

- Just One Second Delay In Page-Load Can Cause 7% Loss In Customer Conversions

- Found that conversion peaked at 2 seconds and dropped 6.7% for every 1 second of further delay. Tagman Blog, March 2012.